A magazine where the digital world meets the real world.

Zooming in on music

Digital music collections, both personal and professional, are constantly growing. It's getting increasingly difficult to find the track or sample you want amongst the tens of thousands you own, never mind the millions of titles in online music stores. And how on earth do you browse music for particular sounds? Are we doomed to constant frustration, poring through lists and lists of tracks and failing to find songs we know are in there somewhere? Perhaps not.

Spot that tune

At the moment, the main way of searching collections relies on textual information attached to the tracks to describe them, such as the title, artist or genre. This text is known as 'metadata'. It means you can search for keywords in that textual information and get Google-like lists of results back: you are just doing text searches. The trouble is that a search can only be as good as the metadata someone has added. If a track was never labelled 'jazz', a search for jazz will never find it, however jazzy it sounds.
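
As a rough illustration of why text search depends entirely on metadata, here is a minimal Python sketch, assuming a tiny hypothetical library (the titles, artists and tags are made up). The search only ever looks at the words attached to each track, so a track nobody tagged can never turn up in a genre search.

```python
# Hypothetical library: each track carries only the text (metadata) someone
# has attached to it. The search below never looks at the audio itself.
TRACKS = [
    {"title": "Blue Morning", "artist": "The Examples", "tags": ["jazz", "mellow"]},
    {"title": "Night Drive", "artist": "Synth Club", "tags": ["electronic"]},
    # Imagine this one sounds electronic, but nobody tagged it, so a genre
    # search will miss it.
    {"title": "Untitled 7", "artist": "Unknown", "tags": []},
]


def metadata_search(tracks, query):
    """Return titles of tracks whose metadata contains every query keyword."""
    keywords = query.lower().split()
    results = []
    for track in tracks:
        # Gather all the text fields into one set of lower-case words.
        text = " ".join([track["title"], track["artist"], *track["tags"]])
        words = set(text.lower().split())
        if all(word in words for word in keywords):
            results.append(track["title"])
    return results


print(metadata_search(TRACKS, "electronic"))  # ['Night Drive']
```

'Untitled 7' is never returned for 'electronic', whatever it actually sounds like: the search is only as good as the tags.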

A more interesting approach is to search the sounds themselves by analysing the audio data: search the music, not the text that has been written about it. Over the last decade, algorithms have been developed that can automatically detect features such as the rhythm, tempo, genre or mood of a song. This is known as 'semantic audio feature extraction'. Once you can detect these features of music, you can use them to work out how similar two pieces of music are. That can then be the basis of a search system where you look for tracks similar to a sample you already have, browsing through the results. The same approach can be used in music recommendation systems to suggest new tracks similar to one you already like.
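
Once features have been extracted, comparing two tracks can be as simple as measuring the distance between their lists of feature values. The sketch below is only an illustration, not how any particular system works: the feature values (tempo, energy, brightness) are invented stand-ins for a feature extractor's output, and Euclidean distance after scaling each feature to 0..1 is just one common choice of similarity measure.

```python
import math

# Hypothetical, hand-made feature values standing in for the output of a
# feature extractor: (tempo in BPM, energy 0..1, brightness 0..1).
FEATURES = {
    "Blue Morning": (92.0, 0.30, 0.40),
    "Night Drive": (124.0, 0.80, 0.70),
    "Club Mix": (128.0, 0.90, 0.75),
    "Slow Rain": (70.0, 0.20, 0.30),
}


def normalise(features):
    """Min-max scale each feature to 0..1 so BPM does not swamp the others."""
    columns = list(zip(*features.values()))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return {
        name: tuple(
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(vec, lows, highs)
        )
        for name, vec in features.items()
    }


def most_similar(features, query, k=2):
    """Rank the other tracks by Euclidean distance to the query's features."""
    scaled = normalise(features)
    target = scaled[query]
    others = [name for name in scaled if name != query]
    others.sort(key=lambda name: math.dist(scaled[name], target))
    return others[:k]


print(most_similar(FEATURES, "Night Drive"))  # ['Club Mix', 'Blue Morning']
```

Scaling matters here: without it, a few beats per minute of tempo difference would outweigh any difference in energy or brightness.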

Exploring bubbles

Having broken away from searching text descriptions, why not get rid of those lists of results too? That is what 'sonarflow', from the company Spectralmind, does. Spectralmind is a spinout company of the Vienna University of Technology that turns the university's research into useful products, combining work on analysing music with innovative ways of visualising it.

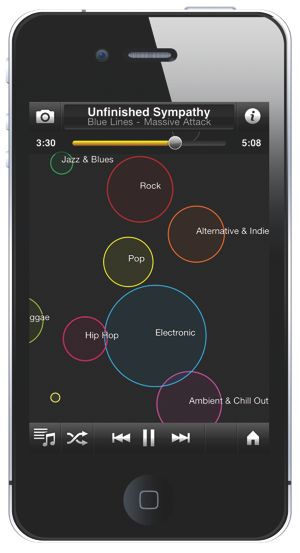

In particular, sonarflow lets you browse music tracks visually, based on how similar they sound. When you start to browse, you are given a visual overview of the available content shown as "bubbles" that roughly cluster the tracks into groups reflecting musical genres. Similar music is automatically clustered into groups, and those groups are themselves clustered into groups, and so on. So once you have chosen a genre, you can zoom into it to reveal ever more bubbles that group the tracks with finer and finer distinctions, until eventually you reach the individual tracks. Any available textual information (i.e., metadata) about the music is also grouped with the bubbles to enrich the browsing experience and provide navigation hints.
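
Exactly how sonarflow builds its clusters isn't described here, so the following is only a sketch of the general idea of bubbles within bubbles, assuming each track has already been turned into a list of feature values. It repeatedly splits a group in two with a small k-means (k = 2) until each group is small enough to be a leaf bubble. The track names, vectors and the `max_leaf` setting are all hypothetical.

```python
import math

# Hypothetical, already-scaled feature vectors for six tracks.
VECTORS = {
    "Slow Rain": (0.0, 0.1),
    "Blue Morning": (0.1, 0.2),
    "Quiet Piano": (0.2, 0.0),
    "Night Drive": (0.9, 0.8),
    "Club Mix": (1.0, 1.0),
    "Warehouse": (0.8, 0.9),
}


def mean(points):
    """Average of a list of equal-length tuples."""
    return tuple(sum(c) / len(points) for c in zip(*points))


def two_means(items, vectors, iterations=10):
    """Split items into two groups with a small deterministic k-means (k=2)."""
    first = vectors[items[0]]
    # Seed the second centre with the point farthest from the first.
    far = max(items, key=lambda name: math.dist(vectors[name], first))
    centres = [first, vectors[far]]
    groups = (list(items), [])
    for _ in range(iterations):
        groups = ([], [])
        for name in items:
            d0 = math.dist(vectors[name], centres[0])
            d1 = math.dist(vectors[name], centres[1])
            groups[0 if d0 <= d1 else 1].append(name)
        if not groups[0] or not groups[1]:
            break  # everything landed on one side: no useful split
        centres = [mean([vectors[n] for n in g]) for g in groups]
    return groups


def build_bubbles(items, vectors, max_leaf=2):
    """Recursively split tracks into nested bubbles until they are small."""
    if len(items) <= max_leaf:
        return list(items)  # a leaf bubble holding individual tracks
    left, right = two_means(items, vectors)
    if not left or not right:  # cannot separate these tracks any further
        return list(items)
    return [build_bubbles(left, vectors, max_leaf),
            build_bubbles(right, vectors, max_leaf)]


print(build_bubbles(list(VECTORS), VECTORS, max_leaf=3))
# [['Slow Rain', 'Blue Morning', 'Quiet Piano'], ['Night Drive', 'Club Mix', 'Warehouse']]
```

Lowering `max_leaf` to 2 zooms in one level further, splitting each of those two bubbles into a pair and a single track.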

At any time, and at any level, you can immediately play whatever is on screen. This means you can choose between playing individual tracks or entire clusters of music with a similar sound or mood. Sonarflow can also be used to recommend new music, because it is easy to explore the neighbourhood of well-known songs and discover new titles that have a similar sound.
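
Playing a whole bubble and exploring a song's neighbourhood both fall out naturally from a nested structure. In this hypothetical sketch, bubbles are Python lists and tracks are strings (an invented layout, not sonarflow's data model). Playing a bubble flattens it into a playlist; recommendations are drawn from the smallest bubble containing a known song first, then from ever larger bubbles around it.

```python
# Hypothetical nested bubbles: lists are bubbles, strings are tracks.
BUBBLES = [
    [["Slow Rain", "Blue Morning"], ["Quiet Piano"]],
    [["Night Drive", "Warehouse"], ["Club Mix"]],
]


def playlist(bubble):
    """Play a whole bubble: flatten it, depth first, into a list of tracks."""
    if isinstance(bubble, str):
        return [bubble]
    tracks = []
    for child in bubble:
        tracks.extend(playlist(child))
    return tracks


def path_to(bubble, track):
    """Chain of bubbles from the outermost one down to the track, or None."""
    if isinstance(bubble, str):
        return [] if bubble == track else None
    for child in bubble:
        sub = path_to(child, track)
        if sub is not None:
            return [bubble] + sub
    return None


def neighbours(bubbles, track, limit=3):
    """Recommend tracks from the innermost bubble outwards, nearest first."""
    path = path_to(bubbles, track)
    if path is None:
        return []
    found = []
    for bubble in reversed(path):  # innermost bubble first
        for other in playlist(bubble):
            if other != track and other not in found:
                found.append(other)
        if len(found) >= limit:
            break
    return found[:limit]


print(playlist(BUBBLES[1]))          # ['Night Drive', 'Warehouse', 'Club Mix']
print(neighbours(BUBBLES, "Club Mix", limit=2))  # ['Night Drive', 'Warehouse']
```

Working outwards from the innermost bubble means the closest-sounding tracks are suggested first, and more distant ones only when the nearby bubbles run out.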

Not all the problems have been solved: searching through millions of anything is never going to be easy, especially when you can't see the thing you are looking for. But by combining fun ways to view music with clever ways of recognising its audio features, searching through those endlessly frustrating lists may soon be a thing of the past.