Letters from the Victorian Smog

by Paul Curzon, Queen Mary University of London

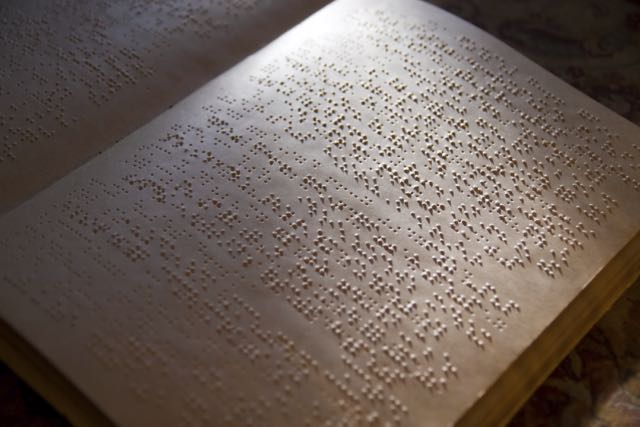

We take for granted that computers use binary to represent numbers, letters, or more complicated things like music and pictures: any kind of information. That was something Ada Lovelace realised very early on. Binary wasn't invented for computers though. Its first modern use as a way to represent letters was invented in the first half of the 19th century, and it is still used today: Braille.

Braille is named after its inventor, Louis Braille. He was born 6 years before Ada, though they probably never met as he lived in France. He was blinded as a child in an accident and, in 1824 when he was only 15, invented the first version of Braille as a way for blind people to read. What he came up with was a representation for letters that a blind person could read by touch.

Choosing a representation for the job is one of the most important parts of computational thinking. It really just means deciding how information is going to be recorded. Binary gives ways of representing any kind of information that are easy for computers to process. The idea is just that you create codes, made up of only two different characters, 1 and 0, to represent things. For example, you might decide that the binary for the letter 'p' was 01110000. For the letter 'c', on the other hand, you might use the code 01100011. The capital letters 'P' and 'C' would have completely different codes again. This is a good representation for computers to use, as the 1s and 0s can themselves be represented by high and low voltages in electrical circuits, or by switches being on or off.
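To see codes like these for yourself, here is a minimal Python sketch. It assumes the codes in the example are the standard ASCII/Unicode ones (which is what 'p' and 'c' above match), and the function name `to_binary` is just made up for illustration.

```python
def to_binary(letter):
    """Return the code for a single character as a string of eight 1s and 0s.

    Assumes the character's code number fits in 8 bits (true for plain
    English letters); bigger code numbers would simply give a longer string.
    """
    return format(ord(letter), "08b")


# Lower-case and capital letters get different codes.
for letter in "pcPC":
    print(letter, to_binary(letter))
```

Running it shows that 'p' and 'P' differ in just one bit, even though they are treated as completely separate characters.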

The first representation Louis Braille chose wasn't great though. It had dots, dashes and blanks - a three-symbol code rather than the two of binary. It was hard to tell the difference between the dots and dashes by touch, so in 1837 he changed the representation, switching to a code of just dots and blanks. He had invented the first modern form of writing based on binary.

Braille works in the same way as modern binary representations for letters. It uses collections of raised dots (1s) and no dots (0s) to represent them. Each position, dot or no dot, gives a bit of information in computer science terms. To make the bits easier to touch they're grouped into pairs. To represent all the letters of the alphabet (and more) you just need 3 pairs: that is 6 positions with 2 choices each, giving 2 × 2 × 2 × 2 × 2 × 2 = 64 distinct patterns. Modern eight-dot Braille adds an extra row of dots, giving 256 dot/no dot combinations in the 8 positions, so that many other special characters can be represented. Representing characters using 8 bits in this way is the exact equivalent of a computer byte.
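The counting above can be checked with a short Python sketch. It is only an illustration: `count_patterns` is a made-up helper name, and each pattern is modelled as a tuple of 1s (dot) and 0s (no dot).

```python
from itertools import product


def count_patterns(positions):
    """Count the dot/no-dot patterns possible with this many positions."""
    return 2 ** positions


# Double-check the formula by listing every 6-position pattern explicitly.
six_dot_patterns = list(product([0, 1], repeat=6))

print(count_patterns(6), len(six_dot_patterns))  # 3 pairs of dots
print(count_patterns(8))                         # 4 pairs of dots: one byte
```

Each extra position doubles the number of patterns, which is why adding one row (2 more positions) takes you from 64 to 256.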

Modern computers use a standardised code called Unicode. It gives an agreed code for referring to the characters in pretty well every language in use today. (Klingon was proposed too, but the proposal was turned down!) There is also a Unicode representation for Braille: each pattern of dots is given its own Unicode code, separate from the dot pattern itself. It allows letters to be displayed as Braille on computers! Because all computers using Unicode agree on the representations of all the different alphabets, characters and symbols they use, they can more easily work together. Agreeing the code means that it is easy to move data from one program to another.
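Here is a rough Python sketch of how a Braille dot pattern can be turned into its Unicode character. It relies on two things not spelt out in this article: Unicode's Braille Patterns block starts at code 2800 (in hexadecimal) with dot 1 as the lowest bit, and the standard Braille alphabet uses dots 1 and 4 for 'c' and dots 1, 2, 3 and 4 for 'p'. The name `braille_char` is made up for illustration.

```python
BRAILLE_BASE = 0x2800  # first code in the Unicode "Braille Patterns" block


def braille_char(dots):
    """Turn a collection of raised dot numbers (1 to 8) into a Unicode character."""
    bits = 0
    for dot in dots:
        bits |= 1 << (dot - 1)  # dot 1 is the lowest bit, dot 8 the highest
    return chr(BRAILLE_BASE + bits)


print(braille_char([1, 4]))        # Braille 'c'
print(braille_char([1, 2, 3, 4]))  # Braille 'p'
```

Notice that the 8 dot positions are used directly as the 8 bits of a byte, which is then added on to the start of the block.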

The 1830s were an exciting time to be a computer scientist! This was around the time Charles Babbage met Ada Lovelace and they started to work together on the Analytical Engine. The ideas that formed the foundation of computer science must have been in the air, or at least in the Victorian smog.